Over the past few days, I’ve received more than 20 messages asking about my ML certification journey, so I thought it might be helpful to share my own learning path with everyone. Please keep in mind that this is my personal story. What I found easy, you might find challenging, and what you breeze through, I may have struggled with. And that’s perfectly okay. It just means we have different strengths, and part of the journey is learning where we need to focus a little more.

One of the most common questions I get is: “Do I need to know math to do machine learning?”

Now, I might be a bit biased here. I have a BSc in Mathematics and completed an intensive mathematics college program (the equivalent of sixth form in the UK). Because of this, I didn’t need to study math specifically for this certification; I already had a strong foundation.

But if you’re coming from a different background, you might find it helpful to revisit some core concepts. That said, it’s important to distinguish between two different roles in the ML space:

- Machine Learning Engineers focus on building, deploying, and scaling models.

- Data Scientists focus on model creation and typically need a deeper understanding of algorithms and statistics; many come from academic backgrounds and often hold PhDs.

This isn’t meant to spark a debate, just to help clarify things. If you’re aiming for the ML Engineer track, don’t be discouraged if you don’t have a PhD or haven’t studied advanced statistics. You do not need to be a rocket scientist to get into ML. There’s a lot you can learn on the job or through structured learning paths like certifications.

Where Should You Start with Machine Learning on Databricks?

If you’re thinking about diving into Machine Learning on Databricks, I’d highly recommend starting with the Databricks Data Engineering learning path.

Why? Because a solid data engineering foundation (the Medallion Architecture, Delta Live Tables (DLT), and query optimization) will make your life much easier when you move on to ML-specific topics like feature engineering. You’ll answer exam questions on those topics faster and with more confidence because you’ll already understand how the data is transformed under the hood.

Now, this doesn’t mean you need to take the Data Engineer certification (unless you want to!). My recommendation is to start with Databricks Academy, where you’ll find online courses that are:

- Free for everyone

- Structured along clearly defined paths

- Mapped to specific roles and skills (ML, DE, DA, etc.)

Databricks runs periodic Learning Festivals where courses (including labs) are made freely available for a limited time, sometimes with certification discounts too.

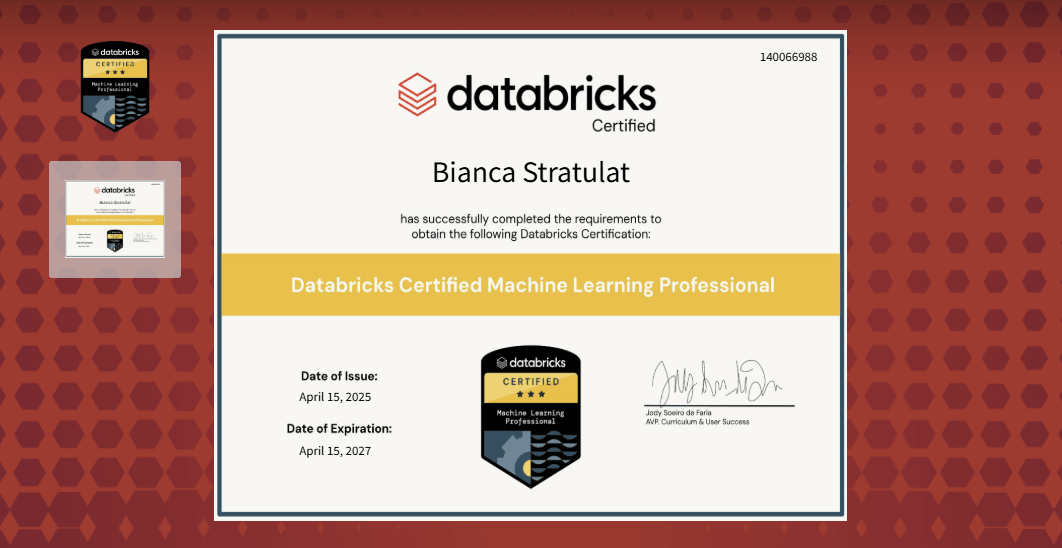

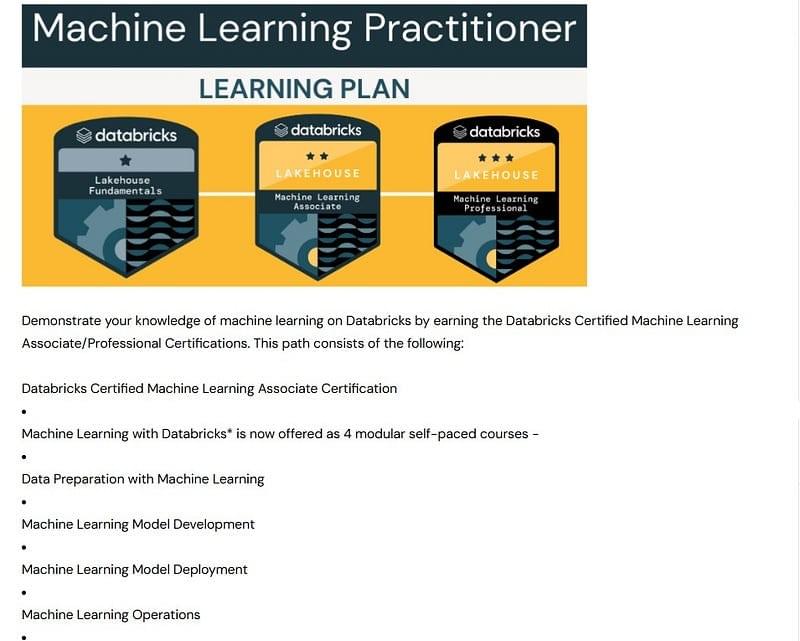

The learning path for the Machine Learning certification is structured into four self-paced modules:

- Data Preparation for Machine Learning

- Machine Learning Model Development

- Machine Learning Model Deployment

- Machine Learning Operations (MLOps)

Each module includes practical labs, and together they form the backbone of the Databricks Certified Machine Learning Associate certification. It’s a great entry point into applied ML with Databricks.

Why You Really Need to Learn Spark and MLflow

If you’re coming from a Data Engineering background, you’re probably used to doing most transformations with SQL, and for many DE certifications that works just fine. You can define Delta Live Tables (DLT) datasets, manage pipelines, and handle data ingestion with little to no PySpark.

But once you step into the world of Machine Learning and MLOps, things change.

You need to know Spark.

Why? Because ML workflows often operate on distributed data. Preprocessing large datasets, vectorizing features, and training models at scale all rely on Spark under the hood. And in Databricks, you’ll be using libraries like pyspark.ml to construct ML pipelines, transform data, and tune models.

That’s not just theory; it’s what the Databricks ML exams expect you to know. You’ll encounter:

- Spark DataFrame syntax and logic

- Feature engineering using VectorAssembler, StringIndexer, and Pipeline stages

- Hyperparameter tuning using CrossValidator and TrainValidationSplit

- MLflow functions like mlflow.log_param() and mlflow.start_run()

- Model tracking and deployment in Unity Catalog

- End-to-end workflows using MLflow Projects and Databricks Workflows

These aren’t just checkbox topics; they’re real-world skills used in production-grade ML systems.

SQL Is Powerful, But It Won’t Get You All the Way

Don’t get me wrong: SQL is brilliant. It’s readable, powerful, and excellent for curating layers in the Medallion Architecture with DLT. But when you’re diving into:

- Custom, non-tabular transformations

- Feature vector assembly and label encoding

- Advanced pipeline orchestration

- Model versioning, lifecycle management, and deployment

…you’ll need Python, Spark, and MLflow working in harmony.

If you rely solely on SQL, you’ll quickly hit a wall when building ML pipelines or deploying models in a real MLOps setup.

Build Depth, Not Just Pass Marks

The most important lesson in this journey?

Don’t just aim to pass — aim to understand.

Spark and MLflow aren’t just “exam topics”, they are the foundation of scalable, production-ready ML systems. Investing time to learn how Spark executes, how MLflow manages models, and how both interact with Unity Catalog will pay off far beyond any certification badge.

And remember: the best engineers aren’t the ones who memorise documentation. They’re the ones who build, break, and fix things. Who understand not just what works, but why it works and how to make it better.

So go hands-on. Spin up a dev workspace. Practice writing and debugging PySpark code. Track experiments with MLflow. Deploy models across environments. And yes , make mistakes. That’s how you learn.

From BiancaDoesData to BiancaDoesML: How I Prepared

Surprising or not, I don’t believe you become an expert just by completing a certification. Studying gives you knowledge, but expertise is built through hands-on experience and delivering real projects.

That said, I didn’t just rely on the online materials. I dedicated time to reading and implementing examples from two fantastic books that I highly recommend:

- 📘 Designing Machine Learning Systems, Chip Huyen (O’Reilly)

- 📗 Practical Machine Learning on Databricks, Debu Sinha

These books helped me connect theory with practical application and taught me a lot about real-world system design and workflows.

One of the best decisions I made was to create my own Databricks environment. I set up:

- A single metastore

- Three workspaces: dev, test, and prod

💡 Pro tip: Use small compute for your workflows, and always set your cluster to terminate after 30 minutes of inactivity; your wallet will thank you later!
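If you create clusters through the API rather than the UI, the auto-termination tip maps to the autotermination_minutes field. The sketch below only builds a request body for the Databricks Clusters API clusters/create endpoint and does not send it. The runtime version string and node type are assumptions (node types differ per cloud, so use values your workspace lists), and the cluster name is hypothetical.

```python
import json


def small_dev_cluster_spec(cluster_name: str) -> dict:
    """Request body for clusters/create: a single-node cluster that auto-terminates."""
    return {
        "cluster_name": cluster_name,
        # Assumption: an ML runtime key; list valid ones with the spark-versions API
        "spark_version": "15.4.x-cpu-ml-scala2.12",
        # Assumption: an AWS node type; Azure and GCP use different IDs
        "node_type_id": "i3.xlarge",
        # Single node: no workers, the driver runs Spark locally
        "num_workers": 0,
        "spark_conf": {
            "spark.databricks.cluster.profile": "singleNode",
            "spark.master": "local[*]",
        },
        "custom_tags": {"ResourceClass": "SingleNode"},
        # The tip from the text: shut down after 30 idle minutes
        "autotermination_minutes": 30,
    }


spec = small_dev_cluster_spec("ml-dev-sandbox")  # hypothetical cluster name
print(json.dumps(spec, indent=2))
```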

Why bother doing all this? Because the exam includes syntax-related questions on Spark and MLflow. I know I’m repeating myself here, but it’s worth driving home: especially at the ML Professional level, the majority of questions revolve around these two areas. You’ll need to get comfortable writing, debugging, and running code, and there’s no substitute for hands-on practice.

These environments also help you simulate model deployment scenarios — super valuable for both the certification and real-life ML pipelines.

Another tip: Use AI tools (yes, even ChatGPT!) to help explain code snippets or error messages you don’t understand. This kind of self-guided learning accelerates your growth like nothing else.

Passion Projects > Practice Tests

One of the most powerful ways to cement your learning is by working on something that truly excites you. Find a passion project (something you care about) and treat it like a real ML use case.

- Create your own datasets (or use open ones)

- Clean and prepare your data

- Perform feature engineering

- Train and compare models

- Register everything in Unity Catalog

- Deploy your model and build a compelling data story

That’s where the magic happens. That’s where you start building true confidence and capability.

Now, did I try any mock exams? Yes, I bought a couple of random ones online. But honestly? I don’t recommend them. The questions didn’t reflect the actual certification and didn’t offer much value. Focus on building practical skills instead.

Because in the end, it’s not just about the certification.

It’s about the learning journey.